pyspark when otherwise|pyspark if else statement

0 · when otherwise in pyspark dataframe

1 · pyspark when with multiple conditions

2 · pyspark when otherwise multiple conditions

3 · pyspark when and otherwise example

4 · pyspark join with multiple conditions

5 · pyspark if else statement

6 · pyspark case when multiple conditions

7 · f is not defined pyspark

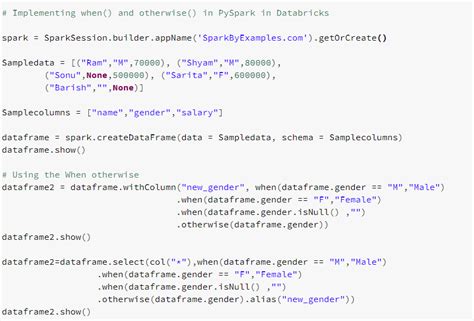

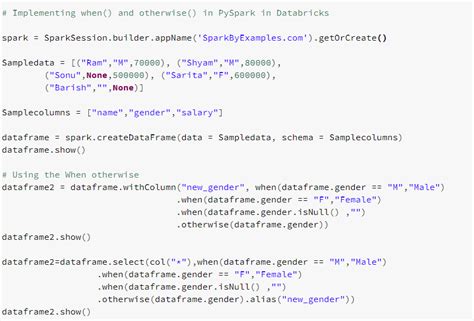

Learn how to use the PySpark when() and otherwise() functions and the SQL CASE WHEN expression to check multiple conditions and return values on a DataFrame. See examples with syntax, code snippets, and output.

If you have a SQL background, you may be familiar with the CASE WHEN statement, which evaluates a sequence of conditions and returns a value for the first condition that is met, similar to SWITCH and IF THEN ELSE statements. Similarly, PySpark ...

We often need to check multiple conditions; below is an example of using PySpark when otherwise with multiple conditions by using ...

Learn how to use PySpark's when and otherwise functions to create new columns based on specified conditions. See examples of basic, chained, nested, and ...

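You can also use `F.when((col("col-1") > 0.0) & (col("col-2") > 0.0), 1).otherwise(0)`, after importing `from pyspark.sql import functions as F` and `from pyspark.sql.functions import col`. Each comparison needs its own parentheses.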

Column.otherwise(value: Any) → pyspark.sql.column.Column

Evaluates a list of conditions and returns one of multiple possible result expressions. If Column.otherwise() is not invoked, None is returned for unmatched conditions.

Learn how to use the PySpark when function with multiple conditions to filter and transform data. See examples of basic, chained, nested, and otherwise ...

Introduction to PySpark's "when" Function. Creating a New Column with a Condition. Multiple Conditions with "when" and "otherwise". Using "when" for String Data. Handling ...

pyspark.sql.functions.when(condition, value)

Evaluates a list of conditions and returns one of multiple possible result expressions. If pyspark.sql.Column.otherwise() is not invoked, None is returned for unmatched conditions.

Learn how to use pyspark.sql.functions.when and pyspark.sql.Column.otherwise to implement a CASE WHEN clause in Spark SQL.

Column.when(condition, value)

Evaluates a list of conditions and returns one of multiple possible result expressions. If Column.otherwise() is not invoked, None is returned for unmatched conditions.

Let us understand how to perform conditional operations using CASE and WHEN in Spark. CASE and WHEN are typically used to apply transformations based on conditions. We ...

In Spark SQL, the CASE WHEN clause can be used to evaluate a list of conditions and return one of multiple results for each column. The same can be implemented directly using the pyspark.sql.functions.when and pyspark.sql.Column.otherwise functions. If otherwise is not used together with when, None will be returned for unmatched conditions.

Understanding PySpark "when" and "otherwise". In PySpark, the "when" function is used to evaluate a column's value against specified conditions. It is very similar to SQL's "CASE WHEN" or Python's "if-elif-else" expressions. You can think of "when" as a way to create a new column in a DataFrame based on certain ...

Tags: expr, otherwise, spark case when, spark switch statement, spark when otherwise, spark.createDataFrame, when, withColumn.

Like the SQL "case when" statement and the switch statement from popular programming languages, Spark SQL DataFrame also supports similar syntax using "when otherwise" ...

Multiple conditions in a PySpark when can be built using & (for and) and | (for or). Note: in PySpark it is important to enclose in parentheses () every expression that is combined to form the condition.

As I mentioned in the comments, the issue is a type mismatch. You need to convert the boolean column to a string before doing the comparison. Finally, you need to cast the column to a string in the otherwise() as well (you can't have mixed types in a column). Your code is easy to modify to get the correct output: ...

The withColumn function in PySpark lets you create a new column with conditions; add the when and otherwise functions and you have a working if-then-else structure. For all of this you need to import the Spark SQL functions, as the following bit of code will not work without the col() function.

Solution: Always use parentheses to explicitly define the order of operations in complex conditions.

Conclusion. In this blog post, we have explored how to use the PySpark when function with multiple conditions to efficiently filter and transform data. We have seen how to use the and and or operators to combine conditions, and how to chain ...

I have a UDF that takes a key and returns the corresponding value from name_dict: `from pyspark.sql import *`, `from pyspark.sql.functions import udf, when, col`, `name_dict = {'James': "` ...

I am trying to use a "chained when" function. In other words, I'd like to get more than two outputs. I tried using the same logic of the concatenate IF function in Excel: df.withColumn("device.

It's much easier to generate the full condition programmatically than to apply it one piece at a time. withColumn is well known for poor performance when it is called many times. The simplest way is to define a mapping and generate the condition from it, like this: dates = {"XXX Janvier 2020":"XXX0120",

Adding slightly more context: you'll need `from pyspark.sql.functions import when` for this. – Sarah Messer, commented Jul 6

Using "when otherwise" on DataFrame. Replace the value of gender with a new value: val df1 = df.withColumn("new_gender", when(col("gender") === "M","Male") .when ...

Sample program – Multiple checks. We can check multiple conditions using when otherwise as shown below. The program starts with: `import findspark`; `findspark.init()`; `from pyspark import SparkContext, SparkConf`; `from pyspark.sql import Row`; `from pyspark.sql.functions import *`; `sc = SparkContext.getOrCreate()`; `# creating dataframe with three records` ...

We can use CASE and WHEN, as in SQL, through expr or selectExpr. If we want to use the API instead, Spark provides functions such as when and otherwise. when is available as part of pyspark.sql.functions, and otherwise can be invoked on the Column that when returns.

The execution plan always has to be evaluated; otherwise Spark wouldn't know how to generate the corresponding code. On the other hand, different branches of evaluation can be either pruned from the plan or skipped during execution (using standard control flow). In your case the plan is simply invalid, as None cannot be used as a literal.

I am trying to check multiple column values in a when and otherwise condition to see whether they are 0 or not. We have a Spark DataFrame with columns from 1 to 11 and need to check their values. ...

In a PySpark DataFrame, use the when().otherwise() SQL functions to find out whether a column has an empty value, and use the withColumn() transformation to replace the value of an existing column. In this article, I will explain how to replace an empty value with None/null on a single column, all columns, and a selected list of columns of a DataFrame with Python ...

Recipe Objective - Learn about when and otherwise in PySpark. PySpark lets Python programs interface with Resilient Distributed Datasets (RDDs) in Apache Spark. This is achieved through the Py4j library. PySparkSQL is the PySpark library developed to apply SQL-like analysis on a massive amount of ...

Logical operations on PySpark columns use the bitwise operators: & for and, | for or, ~ for not. When combining these with comparison operators such as <, parentheses are often needed. In your case, the correct statement is: `import pyspark.sql.functions as F`, `df = df.withColumn('trueVal',` ...
